On 28 June I attended a workshop on User Research as part of the User Experience conference organised by the Bloomsbury Learning Environment and University of London.

In the opening keynote, Jane Lessiter from i2 Media Research introduced the role of user testing and the various ways it can be carried out. She talked about the need to understand the different factors that might affect results, such as the context and the location for the research – should you carry out user testing in the home, a lab, a public place? She also emphasised the importance of considering what we are testing and the value of understanding where people are in their journey, whether that is in their life or in relation to the product they are testing. She presented examples of user testing from the work carried out by i2 Media Research:

- Interviews – for a Pensions project where semi-structured interviews were carried out at home with a questionnaire. The participants were asked to develop a timeline of key life events associated with significant changes in income. They were also asked to prioritise a list of things relating to pensions and work in terms of their importance.

- Focus groups – for a project on understanding the impact of immersive environments, focus groups were used to establish what "impact" means to a selection of stakeholders: those who fund immersive environments, those who create them, and end users with and without prior experience.

- Cognitive walk-throughs – for an online weather service that wanted people to switch to its new weather app. Participants were asked to carry out a series of tasks, e.g. “search for a place, is it going to rain?”, and were recorded using a face reader to capture their emotions during each task. Another example involved observing participants with sight loss setting up digital radios and smart meters.

- Empirical lab trials – for testing video enhancement for colour blind users, a repeated measures design was used, whereby each participant repeated the tasks under different settings or environments. Participants carried out the tasks in different orders to avoid order effects on the results. The focus here was on the acceptability of what they were seeing rather than on improvements, so participants were asked to judge how natural the image was.
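
The keynote did not go into how the task orders were actually assigned, but as a rough illustration of the idea, here is a minimal Python sketch of one common approach, full counterbalancing, where participants are cycled through every possible ordering of the conditions. The participant IDs, the condition names and the `counterbalanced_orders` helper are all made up for this example and are not taken from the i2 Media Research study.

```python
from itertools import permutations


def counterbalanced_orders(participants, conditions):
    """Give each participant one ordering of the conditions.

    Participants are cycled through every possible ordering, so each
    ordering is used about equally often across the group.
    """
    orders = list(permutations(conditions))
    return {
        participant: list(orders[index % len(orders)])
        for index, participant in enumerate(participants)
    }


# Hypothetical participant IDs and display settings, for illustration only.
participants = ["P1", "P2", "P3", "P4", "P5", "P6"]
conditions = ["original", "enhancement A", "enhancement B"]

schedule = counterbalanced_orders(participants, conditions)
for participant, order in schedule.items():
    print(participant, "->", ", ".join(order))
```

With three conditions there are six possible orderings, so six participants cover each ordering once. With many conditions the number of orderings grows quickly, and a partial scheme such as a Latin square would probably be more practical.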

Following the keynote were three case studies about approaches to evaluating the user experience and user testing:

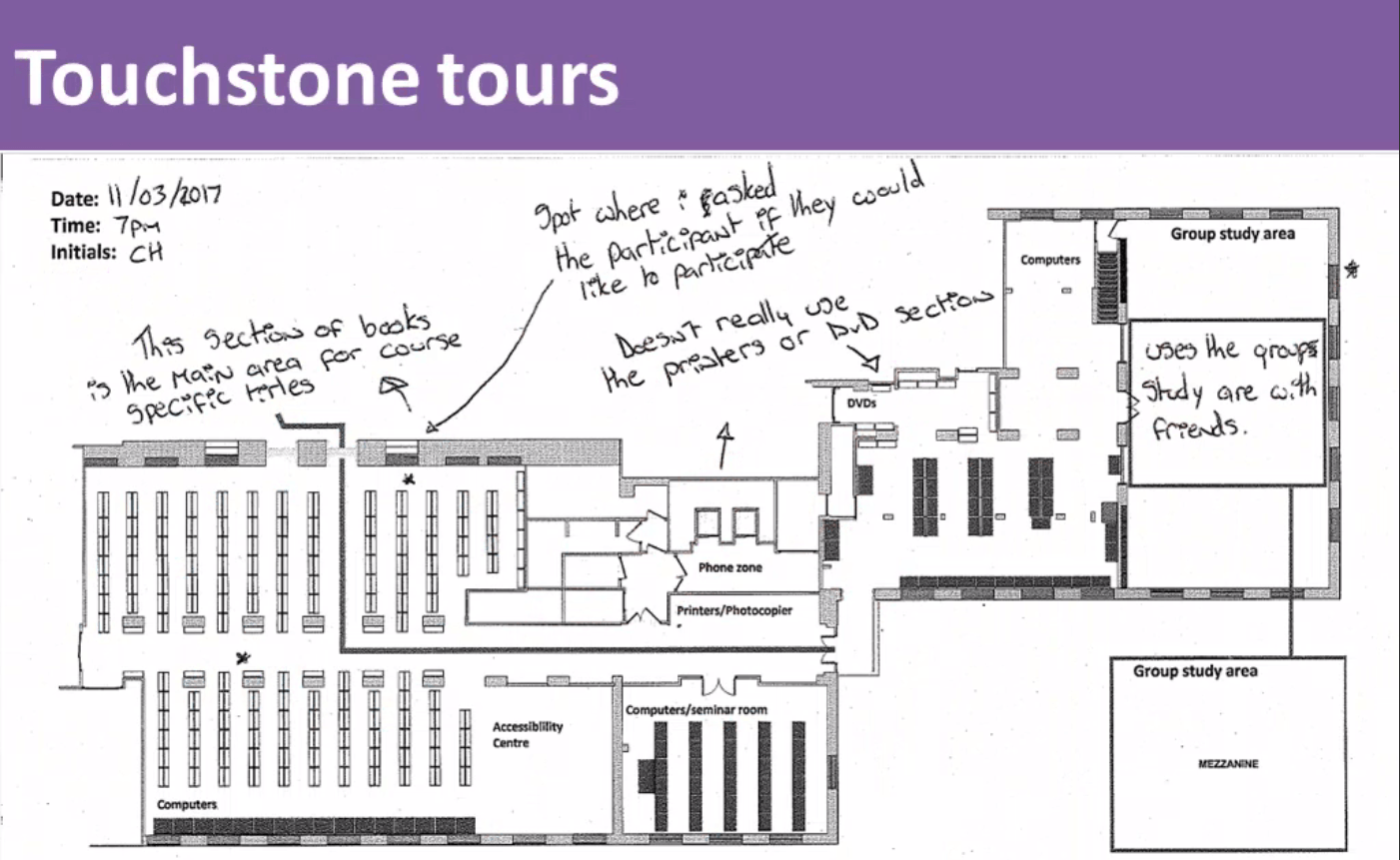

User experience research at Birkbeck Library – Steven Ellis and Emma Illingworth presented their approach to evaluating student use of the Birkbeck Library. They wanted an approach that was more collaborative and avoided survey fatigue, and they received funding that enabled them to employ students to help facilitate their user experience activities. The evaluation was based on observations (to see where people went in the library), cognitive mapping, touchstone tours and focus groups. In the touchstone tours, the facilitator walked around the library with the student and discussed the various parts of the library. The discussions were recorded on a printed copy of the floor plan. All of the data was collected over three weeks, with the first three activities being run by the student facilitators.

User testing for Birkbeck web redesign – Naomi Bain described the process she had taken for evaluating the redesign of Birkbeck’s webpages. As the development work was ongoing, it was difficult to know when things would be ready for testing so user testing was carried out in phases:

- Phase 1 showed people PDFs of the new design and asked them to describe it using three positive and three negative words. This provided some initial reassurance that people felt the design was modern, vibrant and engaging. In addition, they were asked to show where they would click or type first to carry out a specific task, which helped to check that people noticed the availability of certain features, such as menu items.

- Phase 2 used mocked-up pages online, tested on both desktops and mobile phones. Participants were observed using the pages and asked what they thought would happen if they clicked on a particular option. The focus was on which areas people used, e.g. how did people use course search? Would people know to scroll down for more information?

- Phase 3 asked people to test an almost fully functioning version of the site, with specific tasks to explore various parts of the site and find key pieces of information (e.g. find open day dates, find a news story mentioning crocodiles).

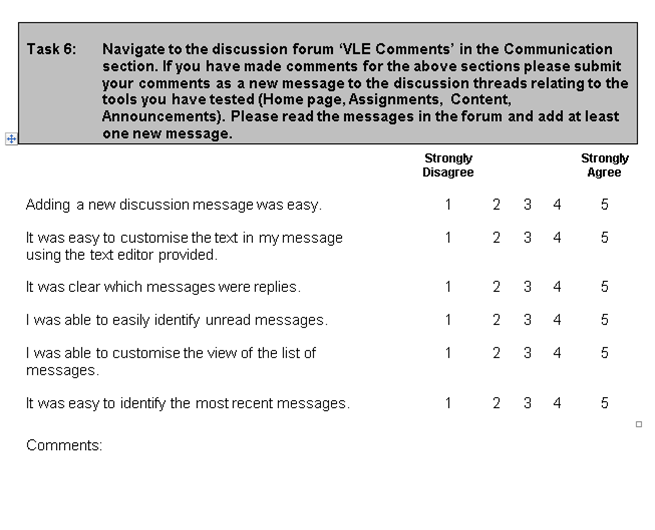

Usability testing for a VLE review – I presented the final case study, which described the user testing carried out as part of the virtual learning environment (VLE) review I led at Imperial College London. The session introduced the four key areas of testing we carried out for the review (usability testing, technical testing, migration testing and functionality testing) and then focussed specifically on the usability testing carried out with staff and students, covering how the sessions were organised and the usability questionnaires and scoring used. Although this review took place from 2010 to 2012, it was valuable for me to see that some of the techniques we used were reflected in the other presentations and even had an ‘official name’, such as the use of a repeated measures design for comparing two VLEs.

The final part of the workshop was a practical activity to design a user experience evaluation, based on the scenario of evaluating a new feature on a website at a university open day. We developed a user testing checklist and a consent form, and talked about what data to collect about the individuals, e.g. demographics, relationship to the institution, previous experience of using the website, level of technical ability, preference for finding out information (website vs telephone) and use of devices. We then put our test plan into practice by reviewing an institutional website: the tester had to find the details for a specific course and provide feedback on their experience.

The event was really useful, and it was good to hear about the different approaches to user experience research as well as to put them into practice during the workshop element of the morning. From an Ed Tech perspective, I can see potential value in incorporating timelines and card sort activities into interviews and focus groups when trying to understand user requirements. In addition, the touchstone tours and cognitive mapping could be useful when evaluating learning spaces.