Yesterday, I went to my first Turnitin Summit in Newcastle. It was hosted at the BALTIC Centre for Contemporary Art, just over the Gateshead Millennium Bridge, so I managed to get in some thought-provoking art, beautiful views over the city and a lush meal at the rooftop restaurant. There’s a little taste in the pics below. But that’s not what this post is about, so let’s get down to business: what’s the low-down on Turnitin?

Similarity schimilarity

Too many folks just want to know which score counts as unacceptable. But the similarity score is not an indicator of plagiarism. Integrity checks could differ across subjects, and may not even be appropriate in some, like coding. Turnitin is aware that the similarity report is difficult to interpret, that showing the percentage as a colour may add to the confusion, and that work is needed on educating teaching staff on how to tailor it to their needs.

Something I learned about, which is currently under research, is detecting the use of word spinners, also known as article spinners or paraphrasing tools. These are pretty new since my days as a student and I’m not sure whether to be impressed or horrified by them.

Formative learning

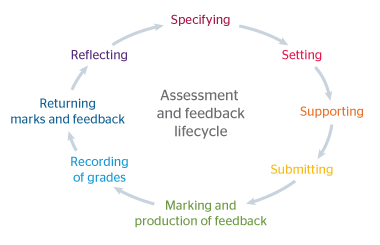

So where do integrity checks belong? Looking at the assessment lifecycle, could these tools be used to support students in their academic writing at the initial stages of assessment? A Tii plugin for Word and Google Docs could allow students to access a similarity report and identify issues with citations, paraphrasing and summarising, so that they attribute sources properly. Is this likely to promote deep learning, or would students be frantically tweaking the document until the stats improved? Turnitin is still considering whether the metadata from these tools could provide insights into the student’s learning process.

Authorship

This tool provides investigators with comparative data when suspicions of contract cheating are raised. It might be that even metadata from Tii’s plugin could feed into the student’s corpus of work. I didn’t get too much more info on this, but it did make me think about how the intentions behind these tools and the expectations set for students can help authors write with integrity. So rather than focusing on whether students are doing their own work, the aim would be a supportive culture that promotes original work from the learning community.

Gradescope

Now, this technology did excite me. Surely, anything that makes the lives of teaching staff easier is worth investing in?! It is ideal for STEM subjects where assessments are still handed in on paper. It will have the capacity to split bulk scans into individual files, assign each one to a student and detect each question using a template mark-up. Teaching staff can grade online and, as answers are marked correct or penalties are applied for incorrect parts, other identical answers can be auto-graded in the same way. It has helped to cut grading times by up to 80%. If that’s the case, surely feedback could be more consistent and timely, because long and arduous marking never helped anyone.
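To picture how grading identical answers in one go might work, here is a minimal Python sketch. It is only an illustration of the idea and not Gradescope’s actual implementation: the function names (`normalise`, `group_answers`, `apply_marks`) and the sample data are all made up, and it assumes answers have already been turned into plain text.

```python
from collections import defaultdict


def normalise(answer: str) -> str:
    """Collapse case and whitespace so trivially different answers match."""
    return " ".join(answer.lower().split())


def group_answers(submissions: dict[str, str]) -> dict[str, list[str]]:
    """Group student names by their normalised answer (hypothetical helper)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for student, answer in submissions.items():
        groups[normalise(answer)].append(student)
    return dict(groups)


def apply_marks(groups: dict[str, list[str]],
                marks_by_answer: dict[str, int]) -> dict[str, int]:
    """Copy the mark given to one answer to every student who gave it."""
    results: dict[str, int] = {}
    for answer, students in groups.items():
        if answer in marks_by_answer:
            for student in students:
                results[student] = marks_by_answer[answer]
    return results


# Made-up example: the marker grades "x = 4" once and both matching
# students receive that mark; "x = 5" still needs a human decision.
submissions = {"amy": "x = 4", "ben": "X =  4", "cat": "x = 5"}
groups = group_answers(submissions)
marks = apply_marks(groups, {"x = 4": 2})
```

The point of the sketch is that the marker makes one decision per distinct answer rather than one per script, which is where the time saving would come from.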

Complex grading workflows

The jury is still out on that one. There is an extended beta but no sign of the tools that will support advanced marking and moderation techniques. Watch this space (if you haven’t been for the last couple of years already)!

Finally, stats

Alas, I was tasked with getting stats on downtime. From the charts below, this year’s service has improved significantly. Let’s see what happens as we approach that busy time when students are submitting work.

Thanks Sarny, a useful overview. I hadn’t heard of article spinners before and dread to think what they turn out. The Word and Google Docs plugins sound like they could be useful for students.

The plugin divided opinion for sure. Like anything, it’s making sure the students know how to use them effectively =D